I’d previously written about property-based testing in Clojure. In this blog post I’d like to talk about how we can do the same in Python using the Hypothesis library. We’ll begin with a quick recap of what property-based testing is, and then dive head-first into writing some tests.

What is property-based testing?

Before we get into property-based testing, let’s talk about how we usually write tests. We provide a known input to the code under test, capture the resulting output, and write an assertion to check that it matches our expectations. This technique of writing tests is called example-based testing since we provide examples of inputs that the code has to work with.

While this technique works, it has some drawbacks. Example-based tests take longer to write since we need to come up with the examples ourselves. It's also easy to miss corner cases.

In contrast, property-based testing allows us to specify the properties of the code and test that they hold true under a wide range of inputs. For example, if we have a function f that takes an integer and performs some computation on it, a property-based test would test it for positive integers, negative integers, very large integers, and so on. These inputs are generated for us by the testing framework and we simply need to specify what kind of inputs we’re looking for.

Having briefly discussed what property-based testing is, let’s write some code.

Writing property-based tests

Testing a pure function

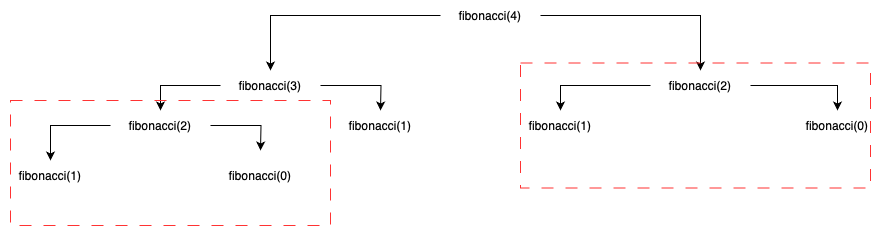

Let’s start with a function which computes the n’th Fibonacci number.
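
A minimal sketch of such a function is given here, assuming a memoized recursive definition. The name `fibonacci` and the use of `functools.cache` are assumptions made for illustration.

```python
import functools


@functools.cache
def fibonacci(n: int) -> int:
    """Return the n'th Fibonacci number, with fibonacci(0) == 0."""
    # Base case: covers n == 0 and n == 1. It also returns negative
    # inputs unchanged, since nothing validates n here.
    if n <= 1:
        return n
    # Recursive case; the cache keeps the number of calls linear in n.
    return fibonacci(n - 1) + fibonacci(n - 2)
```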

The sequence of numbers goes 0, 1, 1, 2, 3, etc. We can see that all of these numbers are greater than or equal to zero. Let’s write a property-based test to formalize this.

    from hypothesis import given, strategies as st

In the code snippet above, we’ve wrapped our test using the @given decorator. This makes it a property-based test. The argument to the decorator is a search strategy. A search strategy generates random data of a given type for us. Here we’ve specified that we need integers. We can now run the test using pytest as follows.

    PYTHONPATH=. pytest .

The test fails with the following summary.

    FAILED test/functions/test_fibonacci.py::test_fibonacci - ExceptionGroup: Hypothesis found 2 distinct failures. (2 sub-exceptions)

When looking at the logs, we find that the first failure is because the maximum recursion depth is reached when the value of n is large.

    n = 453

The second failure is because the function returned a negative integer when the value of n is negative; in this case it is n=-1. This violates our assertion that the numbers in the Fibonacci sequence are non-negative.

    +---------------- 2 ----------------

To remedy the two failures above, we’ll add an assertion at the top of the function which will ensure that the input n is in some specified range. The updated function is given below.
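
One way the guarded function might look is sketched here. The upper bound of 300 (`MAX_N`) and the private `_fibonacci` helper are assumptions; the bound is chosen to match the range used in the tests.

```python
import functools

MAX_N = 300  # assumed upper bound for valid inputs


def fibonacci(n: int) -> int:
    """Return the n'th Fibonacci number for 0 <= n <= MAX_N."""
    # Reject negative inputs and inputs large enough to risk deep recursion.
    assert 0 <= n <= MAX_N, f"n must be between 0 and {MAX_N}, got {n}"
    return _fibonacci(n)


@functools.cache
def _fibonacci(n: int) -> int:
    # Memoized recursion; only ever reached with a validated n.
    if n <= 1:
        return n
    return _fibonacci(n - 1) + _fibonacci(n - 2)
```

Keep in mind that `assert` statements are skipped when Python runs with the `-O` flag, so code that must always validate its input might raise `ValueError` instead.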

We’ll update our test cases to reflect this change in code. The first test case checks the function when n is between 0 and 300.
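
The in-range case might be written as follows. The guarded `fibonacci` is repeated so that the snippet runs on its own; its body and the test name are assumptions.

```python
import functools

from hypothesis import given, strategies as st

MAX_N = 300  # assumed upper bound for valid inputs


@functools.cache
def _fibonacci(n: int) -> int:
    if n <= 1:
        return n
    return _fibonacci(n - 1) + _fibonacci(n - 2)


def fibonacci(n: int) -> int:
    assert 0 <= n <= MAX_N
    return _fibonacci(n)


@given(st.integers(min_value=0, max_value=MAX_N))
def test_fibonacci_in_range(n: int) -> None:
    # Within the accepted range, every result must be non-negative.
    assert fibonacci(n) >= 0
```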

The second case checks when n is large. In this case we check that the function raises an AssertionError.

Finally, we’ll check the function with negative values of n. Similar to the previous test case, we’ll check that the function raises an AssertionError.
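
The two out-of-range cases described above can be sketched together. As before, the `fibonacci` body, the bound of 300, and the test names are assumptions, and `pytest.raises` is used to assert that the call fails.

```python
import functools

import pytest
from hypothesis import given, strategies as st

MAX_N = 300  # assumed upper bound for valid inputs


@functools.cache
def _fibonacci(n: int) -> int:
    if n <= 1:
        return n
    return _fibonacci(n - 1) + _fibonacci(n - 2)


def fibonacci(n: int) -> int:
    assert 0 <= n <= MAX_N
    return _fibonacci(n)


@given(st.integers(min_value=MAX_N + 1))
def test_fibonacci_rejects_large_n(n: int) -> None:
    # Any n above the upper bound must trip the assertion.
    with pytest.raises(AssertionError):
        fibonacci(n)


@given(st.integers(max_value=-1))
def test_fibonacci_rejects_negative_n(n: int) -> None:
    # Any negative n must trip the assertion.
    with pytest.raises(AssertionError):
        fibonacci(n)
```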

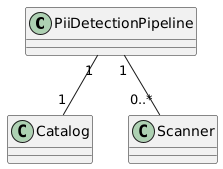

Testing persistent data

We’ll now use Hypothesis to generate data that we’d like to persist in the database. The snippet below shows a Person model with fields to store name and date of birth. The age property returns the current age of the person in years, and the MAX_AGE variable indicates that the maximum age we’d like to allow in the system is 120 years.

    class Person(peewee.Model):
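
The logic behind an age property like this can be sketched independently of the ORM. The function name `age_in_years` and the 365-day approximation of a year are assumptions, not the model's actual code.

```python
import datetime
from typing import Optional

MAX_AGE = 120  # maximum age, in years, allowed in the system


def age_in_years(dob: datetime.date, today: Optional[datetime.date] = None) -> int:
    """Return the approximate number of whole years between dob and today."""
    if today is None:
        today = datetime.date.today()
    # Assumption: a year is approximated as 365 days, ignoring leap days.
    # Floor division means a date of birth in the future gives a negative age.
    return (today - dob).days // 365
```

Passing `today` explicitly keeps the function deterministic, which makes it easier to test.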

We’ll add a helper function to create Person instances as follows.

    def create(name: str, dob: datetime.date) -> Person:

As with the Fibonacci function, we'll add a test case to formalize the expectation that a created person's age is never negative and never exceeds MAX_AGE. This time we're generating random names and dates of birth and passing them to the helper function.

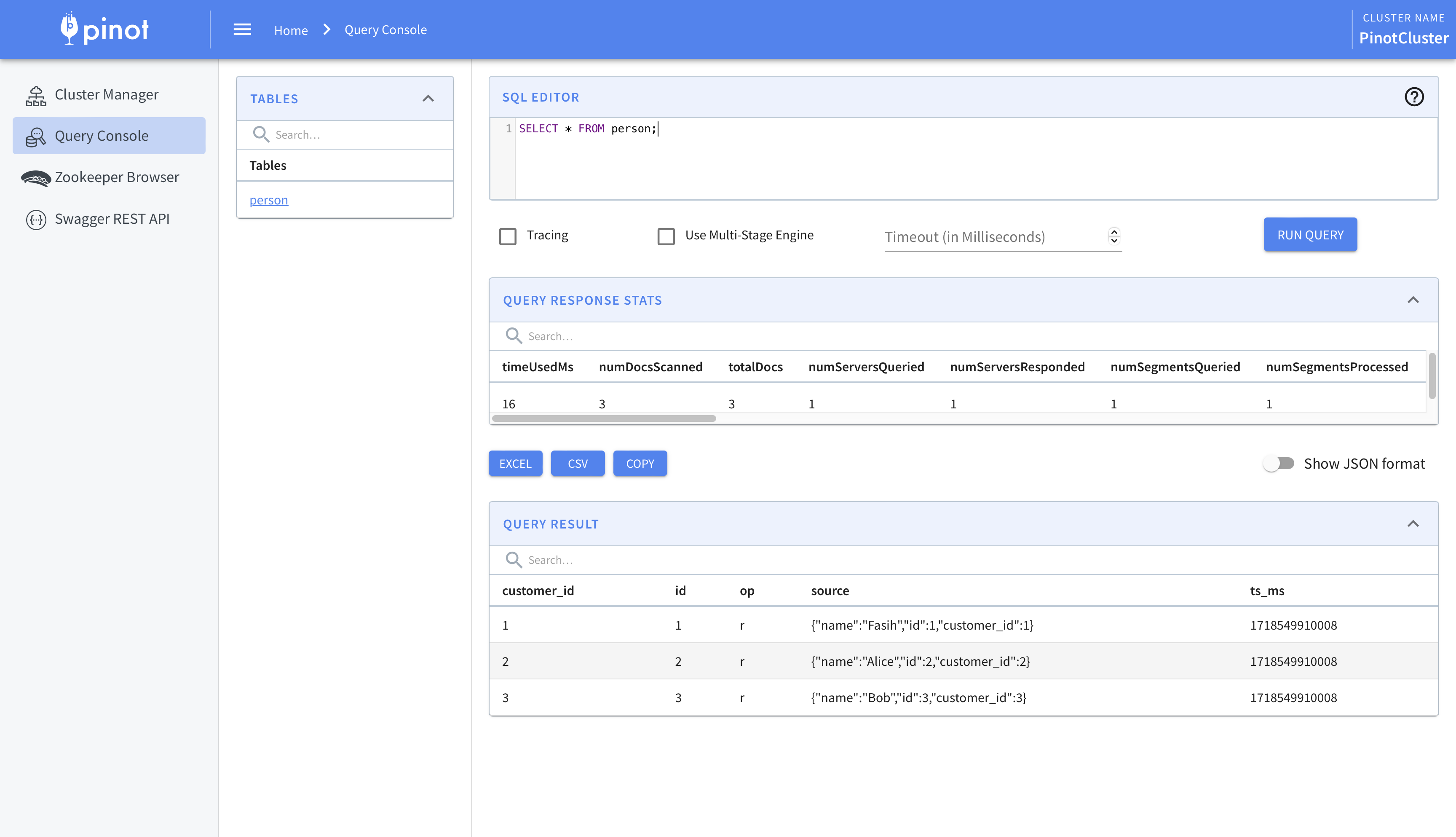

I’m persisting this data in a Postgres table and the create_tables fixture ensures that the tables are created before the test runs.

Upon running the test we find that it fails for two cases. The first case is when the input string contains a NUL character (\x00). Postgres does not allow strings with NUL characters in them.

    ValueError: A string literal cannot contain NUL (0x00) characters.

The second case is when the date of birth is in the future.

    AssertionError: assert 0 <= -1

To remedy the first failure, we'll sanitize the name before it is stored in the table. We'll create a helper function which removes any NUL characters from the string and call it before name gets saved.

    def sanitize(s: str) -> str:
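
One way the body of `sanitize` might look, assuming that dropping NUL characters is all that is required:

```python
def sanitize(s: str) -> str:
    """Remove NUL characters, which Postgres rejects in string values."""
    # str.replace returns a new string; every occurrence of "\x00" is dropped.
    return s.replace("\x00", "")
```

A string made up only of NUL characters becomes empty after sanitizing, which is why that case needs its own check.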

To remedy the second failure, we'll add an assertion ensuring that the age is between 0 and 120, which rejects dates of birth in the future as well as implausibly old ones. The updated create function is shown below.

    def create(name: str, dob: datetime.date) -> Person:
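
A sketch of the validation such a helper might perform is given here. To keep it runnable without a database, a plain dataclass stands in for the peewee model, and nothing is saved. The assertion messages, the 365-day year, and the stand-in class are all assumptions.

```python
import dataclasses
import datetime

MAX_AGE = 120  # stands in for Person.MAX_AGE


@dataclasses.dataclass
class Person:
    # Plain stand-in for the peewee model, so the sketch needs no database.
    name: str
    dob: datetime.date

    @property
    def age(self) -> int:
        # Approximates a year as 365 days.
        return (datetime.date.today() - self.dob).days // 365


def sanitize(s: str) -> str:
    return s.replace("\x00", "")


def create(name: str, dob: datetime.date) -> Person:
    name = sanitize(name)
    # A name made up only of NUL characters is empty after sanitizing.
    assert name, "name must not be empty"
    person = Person(name=name, dob=dob)
    # Rejects future dates (negative age) and dates that are too old.
    assert 0 <= person.age <= MAX_AGE, "age out of range"
    return person  # the real helper would also persist the row
```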

We’ll update the test cases to reflect these changes. Let’s start by creating two variables that will hold the minimum and maximum dates allowed.

    MIN_DATE = datetime.date.today() - datetime.timedelta(days=Person.MAX_AGE * 365)
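
Both bounds might be defined along these lines. The name `MAX_DATE` and its value (today) are assumptions, and a module-level `MAX_AGE` stands in for `Person.MAX_AGE` so that the snippet runs on its own.

```python
import datetime

MAX_AGE = 120  # stands in for Person.MAX_AGE

# Read the clock once so both bounds are derived from the same day.
TODAY = datetime.date.today()

# Earliest allowed date of birth: MAX_AGE years ago, counting 365 days a year.
MIN_DATE = TODAY - datetime.timedelta(days=MAX_AGE * 365)

# Latest allowed date of birth (assumed): today, since nobody is born in the future.
MAX_DATE = TODAY
```

Since leap days are ignored, `MIN_DATE` falls about a month short of 120 calendar years ago.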

Next, we'll add a test to ensure that we raise an AssertionError when the string contains only NUL characters.

Next, we’ll add a test to ensure that dates cannot be in the future.

Similarly, we’ll add a test to ensure that dates cannot be more than 120 years in the past.

Finally, we’ll add a test to ensure that in all other cases, the function creates a Person instance as expected.
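
The four cases described above might be sketched as follows. A dataclass again stands in for the peewee model so that the tests run without Postgres or the create_tables fixture; the test names, the stand-in, and the 365-day year are assumptions.

```python
import dataclasses
import datetime

import pytest
from hypothesis import given, strategies as st

MAX_AGE = 120
TODAY = datetime.date.today()
MIN_DATE = TODAY - datetime.timedelta(days=MAX_AGE * 365)
MAX_DATE = TODAY


@dataclasses.dataclass
class Person:  # stand-in for the peewee model
    name: str
    dob: datetime.date

    @property
    def age(self) -> int:
        return (datetime.date.today() - self.dob).days // 365


def sanitize(s: str) -> str:
    return s.replace("\x00", "")


def create(name: str, dob: datetime.date) -> Person:
    name = sanitize(name)
    assert name
    person = Person(name=name, dob=dob)
    assert 0 <= person.age <= MAX_AGE
    return person


# Reusable strategies for inputs that should be accepted.
valid_names = st.text(min_size=1).filter(lambda s: sanitize(s) != "")
valid_dates = st.dates(min_value=MIN_DATE, max_value=MAX_DATE)


@given(name=st.text(alphabet="\x00", min_size=1), dob=valid_dates)
def test_rejects_nul_only_names(name, dob):
    with pytest.raises(AssertionError):
        create(name, dob)


@given(name=valid_names, dob=st.dates(min_value=MAX_DATE + datetime.timedelta(days=1)))
def test_rejects_future_dates(name, dob):
    with pytest.raises(AssertionError):
        create(name, dob)


# Dates at least MAX_AGE + 1 (365-day) years back give an age above MAX_AGE.
too_old = st.dates(max_value=TODAY - datetime.timedelta(days=(MAX_AGE + 1) * 365))


@given(name=valid_names, dob=too_old)
def test_rejects_dates_too_far_in_the_past(name, dob):
    with pytest.raises(AssertionError):
        create(name, dob)


@given(name=valid_names, dob=valid_dates)
def test_creates_person_for_valid_inputs(name, dob):
    person = create(name, dob)
    assert person.name == sanitize(name)
    assert 0 <= person.age <= MAX_AGE
```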

The tests pass when we rerun them, which gives us much more confidence that the function behaves as expected.

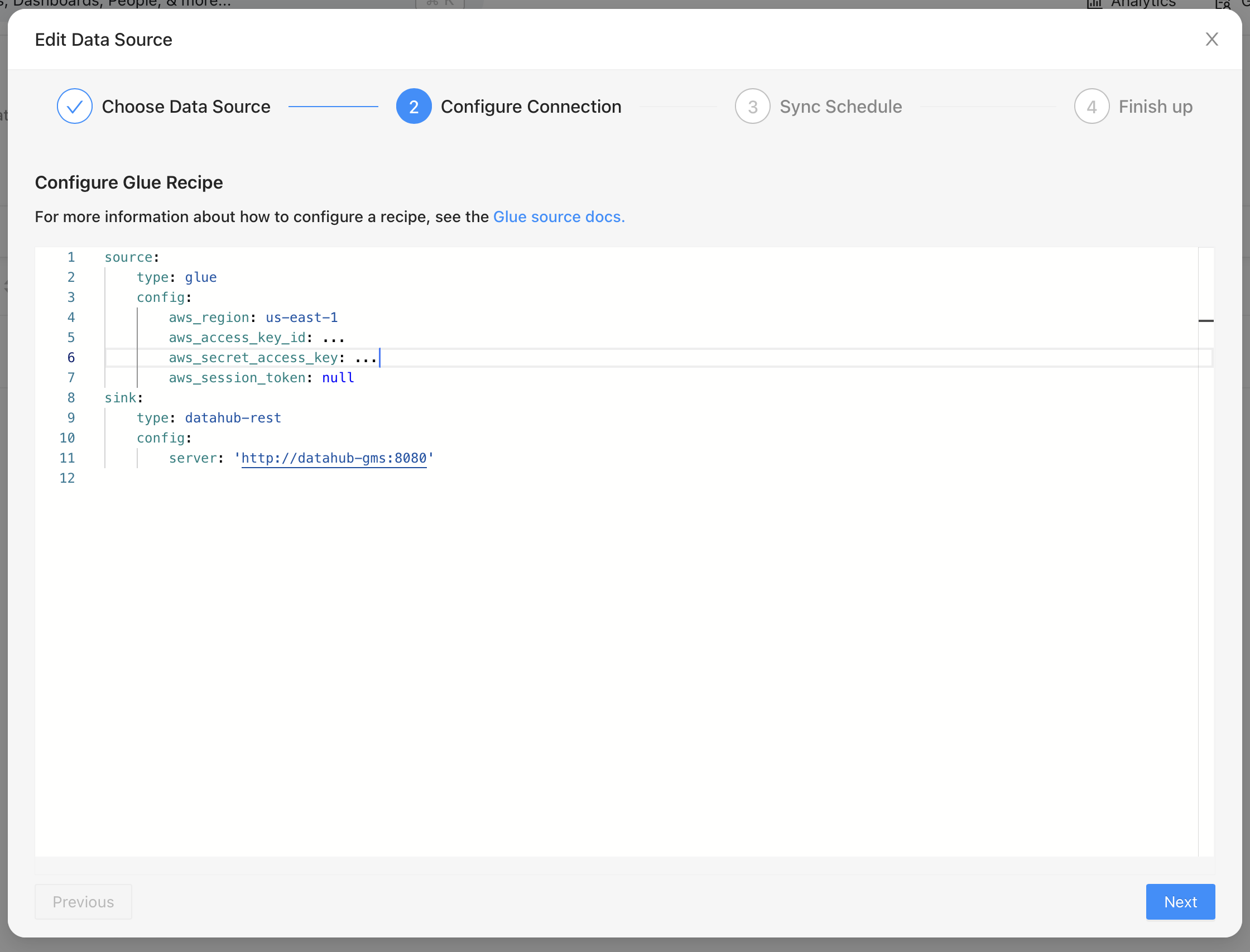

Testing a REST API

Finally, we’ll look at testing a REST API. We’ll create a small Flask app with an endpoint which allows us to create Person instances. The API endpoint is a simple wrapper around the create helper function and returns the created Person instance as a dictionary.

We’ll add a test to generate random JSON dictionaries which we’ll pass as the body of the POST request. The test is given below.

As with the tests for the create function, we check that the API returns a successful response when the inputs are valid.

That's it. That's how we can leverage Hypothesis to test Python code. You'll find the code for this post in the GitHub repository.