One of my favorite food delivery apps recently launched a feature where your friends can recommend dishes to you. This got me thinking. What if we could provide recommendations from more than just friends? What if recommendations came from the people around you as they browse restaurants and place orders? In this post we’ll take a look at how we can go about building such a system.

Getting Started

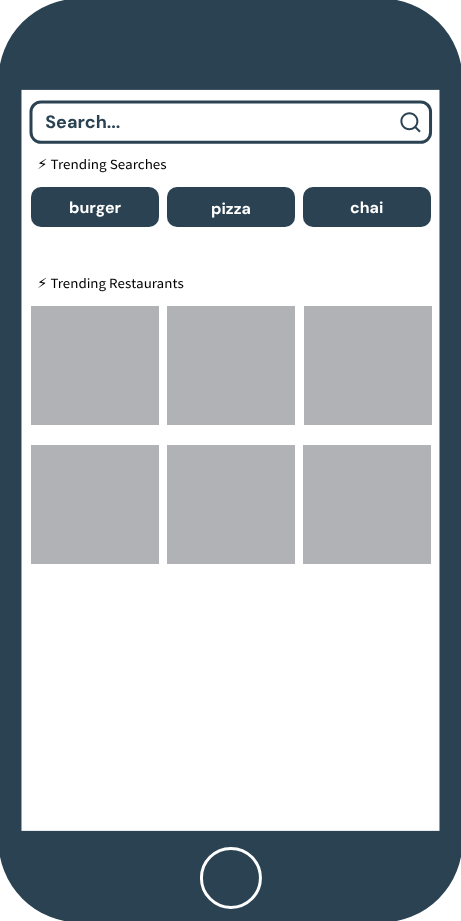

Imagine opening your favorite food delivery app and being presented with the trending searches and restaurants, something like the mockup shown here, with three trending searches and a few trending restaurants. It turns out that tracking user activity from search to purchase allows us to find out what’s gaining traction. Let’s design such a system.

We’ll begin by tracking a user’s journey from search to purchase.

Let’s say that the user begins their food ordering journey by searching for “pizza”. They’re presented with a list of restaurants that sell pizza. They click on a few restaurants, browse the menu, and finally add a large pizza to their cart from one of the restaurants. Finally, they place the order by making the payment. If we were to track each interaction of the user as an event, it would look something like this.

Search for “pizza”.

Present a list of restaurants.

Click on a restaurant.

Click on a restaurant.

Add pizza to cart.

Add a bottle of cola to cart.

Purchase.

As we collect more events, we’ll see patterns emerge. For example, we may discover that pizza is one of the trending searches because it’s being searched for more often. Let’s see how we can do all of this programmatically. It all starts with tracking the user’s search.

Let every search generated by the user be tagged with a unique identifier. This identifier, which we’ll call query_id, is attached to every event in the journey that follows from that search. Every new search generates a new query_id, but the query_id stays unchanged for all events that belong to the same search. Similarly, we’ll track a user with a unique identifier called user_id. Let’s model the user’s search as an event as follows.

1 | { |
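As a minimal sketch, a query event could be built along these lines. Only query_id, user_id, the "query" type, and the search phrase come from the description here; the `make_query_event` helper, the exact field names, the UUID format, and the `timestamp` field are assumptions made for illustration.

```python
import json
import uuid
from datetime import datetime, timezone


def make_query_event(user_id: str, query: str) -> dict:
    """Build a "query" event; a fresh query_id is minted for every new search."""
    return {
        "type": "query",
        "query_id": str(uuid.uuid4()),  # reused by every later event in this journey
        "user_id": user_id,
        "query": query,
        "timestamp": datetime.now(timezone.utc).isoformat(),  # assumed field
    }


event = make_query_event(user_id="user-1", query="large pizza")
print(json.dumps(event, indent=2))
```

A random UUID is just one convenient way to get an identifier that is unique per search; any scheme that guarantees uniqueness would do.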

In this event, which is of type “query”, the user searched for “large pizza”. Similarly, we’ll model the results that were displayed to them as an event.

1 | { |

In this event, which is of type “results”, we see that the user was presented with two restaurants. Next, let’s model the user clicking on one of the restaurants.

1 | { |

In this event, which is of type “click”, the user clicked on the restaurant called “La Pizzeria”. Next, let’s model the user adding two items to their cart.

1 | { |

In the events, which are of type “add_to_cart”, we see that the user added two items to their cart. Finally, let’s model the purchase event.

1 | { |

In the events, which are of type “purchase”, we see that the user purchased the two items.
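To see the whole journey in one place, here is a rough sketch of the remaining events as plain Python dictionaries. The event types and the restaurant “La Pizzeria” come from the walkthrough above; the field names, the `event` helper, the second restaurant name, and the choice of one purchase event per item are assumptions.

```python
import uuid

query_id = str(uuid.uuid4())  # minted when the user searched for "large pizza"
user_id = "user-1"            # hypothetical user


def event(event_type: str, **fields) -> dict:
    """Attach the shared identifiers to every event in the journey."""
    return {"type": event_type, "query_id": query_id, "user_id": user_id, **fields}


journey = [
    event("results", restaurants=["La Pizzeria", "Pizza Palace"]),  # second name is made up
    event("click", restaurant="La Pizzeria"),
    event("add_to_cart", restaurant="La Pizzeria", item="pizza"),
    event("add_to_cart", restaurant="La Pizzeria", item="cola"),
    event("purchase", restaurant="La Pizzeria", item="pizza"),
    event("purchase", restaurant="La Pizzeria", item="cola"),
]

# Every event carries the same query_id, so it can be traced back to the search.
print([e["type"] for e in journey])
print(all(e["query_id"] == query_id for e in journey))
```

Carrying query_id on every event is what makes the later joins possible: a purchase never has to guess which search led to it.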

Having seen how to track a user’s journey from search to purchase using events, let’s see how we can mine these events for patterns. Let’s write a simple Python script that demonstrates this using the events we’ve seen above.

1 | import datetime |

Running the script produces the following output.

1 | query item count |

In the output, we see that the query “large pizza” resulted in the purchase of one pizza and one bottle of cola.
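The same join can be sketched with nothing but the standard library. This is not the script from this post, just a dependency-free illustration of the idea; the event dictionaries and their field names are assumed.

```python
from collections import Counter

# Illustrative events; field names are assumptions.
events = [
    {"type": "query", "query_id": "q1", "user_id": "u1", "query": "large pizza"},
    {"type": "purchase", "query_id": "q1", "user_id": "u1", "item": "pizza"},
    {"type": "purchase", "query_id": "q1", "user_id": "u1", "item": "cola"},
]

# Map each query_id to the phrase the user typed.
query_text = {e["query_id"]: e["query"] for e in events if e["type"] == "query"}

# Count purchases per (query, item) pair by looking up each purchase's query_id.
metrics = Counter(
    (query_text[e["query_id"]], e["item"])
    for e in events
    if e["type"] == "purchase" and e["query_id"] in query_text
)

for (query, item), count in metrics.items():
    print(query, item, count)
```

Purchases whose query_id has no matching query event are skipped here; whether to drop or keep such orphans is a design choice that depends on how reliable the event pipeline is.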

What the script does is tie the purchase to its original query using the query_id. Finding the trending phrases and keywords is now simply a matter of grouping on the query.

```python
trending = metrics.groupby(["query"])["count"].sum().reset_index().sort_values(["count"], ascending=False)
```

This gives us the following output which shows us that the query “large pizza” resulted in the purchase of two items.

1 | query count |
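For readers without pandas at hand, the grouping step can be approximated with `collections.Counter`. The rows below are illustrative; the “burger” row is made up purely to show the ranking.

```python
from collections import Counter

# (query, item, count) rows; the "burger" row is hypothetical.
metrics = [
    ("large pizza", "pizza", 1),
    ("large pizza", "cola", 1),
    ("burger", "cheeseburger", 1),
]

# Sum the counts per query, ignoring which item was bought.
per_query = Counter()
for query, _item, count in metrics:
    per_query[query] += count

# most_common() sorts by count, highest first.
trending = per_query.most_common()
print(trending)  # [('large pizza', 2), ('burger', 1)]
```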

As we collect more events, we’ll see more query phrases emerge. These can then be presented to the user in the application. Similarly, tying the click events to queries will show us what query phrases bring the user to a particular restaurant. Finally, aggregating the click or purchase events will show us which restaurants are trending.
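The click-based aggregations could look roughly like this. The events, field names, and the second restaurant are assumptions made for the sake of the example.

```python
from collections import Counter, defaultdict

# Illustrative events; "Pizza Palace" and the field names are hypothetical.
events = [
    {"type": "query", "query_id": "q1", "query": "large pizza"},
    {"type": "click", "query_id": "q1", "restaurant": "La Pizzeria"},
    {"type": "query", "query_id": "q2", "query": "pizza"},
    {"type": "click", "query_id": "q2", "restaurant": "La Pizzeria"},
    {"type": "click", "query_id": "q2", "restaurant": "Pizza Palace"},
]

query_text = {e["query_id"]: e["query"] for e in events if e["type"] == "query"}
clicks = [e for e in events if e["type"] == "click"]

# Which restaurants are trending: count clicks per restaurant.
trending_restaurants = Counter(e["restaurant"] for e in clicks)

# Which query phrases bring users to each restaurant.
queries_by_restaurant = defaultdict(Counter)
for e in clicks:
    queries_by_restaurant[e["restaurant"]][query_text[e["query_id"]]] += 1

print(trending_restaurants.most_common(1))         # [('La Pizzeria', 2)]
print(dict(queries_by_restaurant["La Pizzeria"]))  # {'large pizza': 1, 'pizza': 1}
```

Swapping the click events for purchase events gives a stricter notion of “trending”, since a purchase is a stronger signal than a click.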

This small example shows how tracking user interactions with events can be used to find which query phrases are trending.

In a production environment, and to make this real-time, events would have to be emitted to a log like Kafka and processed with a stream processing framework like Spark or Flink. Once the aggregated metrics are generated, they’d be written to a database from which they can be served to the user.

Finito.